This article will focus on a walkthrough for principal component analysis in Python.

[the_ad id=”3031″]Table of Contents

- Introduction

- Principal component analysis (Overview)

- Principal component analysis in Python

- Relationship of principal components to the original dataset

- Explained variance of principal components

- Conclusion

Introduction

One of the main reasons for writing this article became my obsession to know the details, logic, and mathematics behind Principal Component Analysis (PCA). Majority of the online tutorials and articles about principal component analysis in Python today focus on showing learners how to apply this technique and visualize the results, rather than starting from the very beginning as to why do we even need it in the first place? What is it with our data that we need to shrink the number of features or group them?

Let’s start from the beginning. What are you going to do with the dataset you have even if you don’t do any dimensionality reduction? I guess you are trying to feed it to a machine learning algorithm right?

So that’s our first step. Our goal is to have a algorithm-friendly dataset. What do we mean by that?

When you have a lot of features, there are a few potential drawbacks:

- Your model will have a high degree of complexity

- They may cause a significant amount of noise

- If they have different scales, it reduces the performance of several algorithms that are scale-sensitive

- More complicated visualizations in n-Dimensional space

Here is where PCA comes into play. It will help us reduce the dimensionality of the dataset by extracting/eliminating the important/unimportant features (which is used in Word2Vec models).

[the_ad id=”3031″]Principal Component Analysis (Overview)

Principal component analysis (or PCA) is a linear technique for dimensionality reduction. Mathematically speaking, PCA uses orthogonal transformation of potentially correlated features into principal components that are linearly uncorrelated.

As a result, the sequence of n principal components is structured in a descending order by the amount of the variance in the original dataset they explain. What this essentially means is that the first principal component explains more variance then the second principal component and so on.

It is much better to put it into context of how principal components are calculated step by step:

- Calculate the covariance matrix

- Calculate eigenvalues

- Calculate eigenvectors

- Sort eigenvectors according to eigenvalues in decreasing order

- Transform original data to k-dimensional using k eigenvectors

Note: as mentioned before, depending on the data you are working with and the scaling of features, you may want to standardize the data before running PCA on it.

[the_ad id=”3031″]Principal component analysis in Python

To continue following this tutorial we will need two Python libraries: pandas, numpy, sklearn, and matplotlib.

If you don’t have them installed, please open “Command Prompt” (on Windows) and install them using the following code:

pip install pandas

pip install numpy

pip install sklearn

pip install matplotlib

Import the required libraries:

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

from sklearn.datasets import load_wine

from sklearn.preprocessing import StandardScaler

from sklearn.decomposition import PCA

Once the libraries are downloaded, installed, and imported, we can proceed with Python code implementation.

Step 1: Load the dataset

In this tutorial we will use the wine recognition dataset available as a part of sklearn library. This dataset contains 13 features and target being 3 classes of wine.

The description of the dataset is below:

- Alcohol

- Malic acid

- Ash

- Alcalinity of ash

- Magnesium

- Total phenols

- Flavanoids

- Nonflavanoid phenols

- Proanthocyanins

- Color intensity

- Hue

- OD280/OD315 of diluted wines

- Proline

This dataset is particularly interesting to illustrate classification problems as well as it is great for our showcase of PCA application. It has enough features to see the true benefit of dimensionality reduction.

wine = load_wine()

df = pd.DataFrame(wine.data, columns=wine.feature_names)

print(df.iloc[:,0:4].head())

Output:

alcohol malic_acid ash alcalinity_of_ash

0 14.23 1.71 2.43 15.6

1 13.20 1.78 2.14 11.2

2 13.16 2.36 2.67 18.6

3 14.37 1.95 2.50 16.8

4 13.24 2.59 2.87 21.0

Data from sklearn, when imported (wine), appear as container objects for datasets. It is similar to a dictionary object. Then we convert it to a pandas dataframe and use the feature names as our column names.

Since we have 13 features, it will be very wide to show, so we take a look at the first 4 columns just to make sure that our code worked.

Step 2: Exploring the dataset

Now let’s take a look at the basic descriptive statistics of this dataframe. We will continue to just display the first 4 columns to save some space in the output.

print(df.iloc[:,0:4].describe())

Output:

alcohol malic_acid ash alcalinity_of_ash

count 178.000000 178.000000 178.000000 178.000000

mean 13.000618 2.336348 2.366517 19.494944

std 0.811827 1.117146 0.274344 3.339564

min 11.030000 0.740000 1.360000 10.600000

25% 12.362500 1.602500 2.210000 17.200000

50% 13.050000 1.865000 2.360000 19.500000

75% 13.677500 3.082500 2.557500 21.500000

max 14.830000 5.800000 3.230000 30.000000

Below is a scatter plot matrix for the 4 features we have been working with above. It is of a smaller size on the blog page so it doesn’t reduce the page load time. If you would like to explore it in depth, you can easily recreate it with a few parameter tweaks.

What you see in this scatterplot matrix is a visualization of the descriptive statistics output. We will use it to compare with the scaled data to show that the distribution and the spread of the values for each feature remained the same after standardization.

As we can see, the ranges of each feature are quite different, along with their means and variances. Our next step is to scale the data for each feature by subtracting its mean and dividing by its standard deviation for each value.

Step 3: Standardize the dataset

PCA is mainly focused on features that maximize variance. In case of the current not scaled data, PCA will consider that feature 4 (‘alcalinity_of_ash’) dominates the maximum variance metric since its range is 5-10 times larger compared to features 1:3. Hence the resulting principal components can be very different from the standardized data. More detailed information about the importance of scaling for PCA is available here.

When it comes to scaling our data, we will apply the following standardization method:

$$ z_i={x_i – \bar{x} \over \sigma}$$

df = StandardScaler().fit_transform(df)

df=pd.DataFrame(df,columns=wine.feature_names)

Output:

alcohol malic_acid ash alcalinity_of_ash

0 1.518613 -0.562250 0.232053 -1.169593

1 0.246290 -0.499413 -0.827996 -2.490847

2 0.196879 0.021231 1.109334 -0.268738

3 1.691550 -0.346811 0.487926 -0.809251

4 0.295700 0.227694 1.840403 0.451946

When we apply the StandatdScaler() to a dataframe, the resulting transformed object is an array, which we then convert back to a pandas dataframe object. We can see that the scale of the transformed features is more similar across features than in raw data.

To show what this implies, let’s take a look at the descriptive statistics of the scaled data.

print(df.iloc[:,0:4].describe())

Output:

alcohol malic_acid ash alcalinity_of_ash

count 1.780000e+02 1.780000e+02 1.780000e+02 1.780000e+02

mean 7.943708e-15 3.592632e-16 -4.066660e-15 -7.983626e-17

std 1.002821e+00 1.002821e+00 1.002821e+00 1.002821e+00

min -2.434235e+00 -1.432983e+00 -3.679162e+00 -2.671018e+00

25% -7.882448e-01 -6.587486e-01 -5.721225e-01 -6.891372e-01

50% 6.099988e-02 -4.231120e-01 -2.382132e-02 1.518295e-03

75% 8.361286e-01 6.697929e-01 6.981085e-01 6.020883e-01

max 2.259772e+00 3.109192e+00 3.156325e+00 3.154511e+00

Notice that in the scaled data the means of features are centered around 0 and variance is the unit variance.

The matrix scatterplot of the scaled data below visualizes the point mentioned above.

You can recreate it the same way as the scatter plot matrix before. What’s important is to compare the distributions on the features and the spread of the scaled values, notice that those are the same.

Note: if you have categorical data, you will need to label encode it before scaling.

Step 4: Apply principal component analysis in Python

After scaling our data, we are on track to the most interesting part of this tutorial. We will go ahead and apply PCA to the scaled dataset.

pca = PCA(n_components=2)

pca_model=pca.fit(df)

df_trans=pd.DataFrame(pca_model.transform(df), columns=['pca1', 'pca2'])

First, store the instance of sklearn PCA as pca with 2 components. The reason we chose specifically 2 is just for simplicity and presentation in this tutorial. Later in the article we will discuss how to find the number of optimal principal components.

pca_model stores the eigenvectors of the applied technique, which is used to transform the scaled dataset df into df_trans by reducing its shape from 13 original features to 2 features which are represented by principal components.

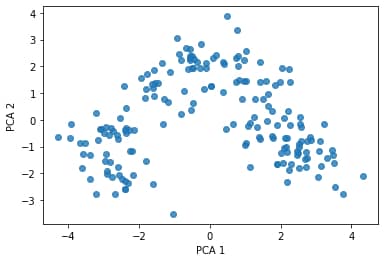

Below is the visualization of this dimensionality reduction (from 13-dimensional to 2-dimensional. Let’s take a look:

print(df_trans.head())

Output:

pca1 pca2

0 3.316751 -1.443463

1 2.209465 0.333393

2 2.516740 -1.031151

3 3.757066 -2.756372

4 1.008908 -0.869831

What we see above is our scaled 13-feature dataset transformed to 2-feature dataset where each feature is a principal component. What it does now is allows us to visualize a 13-dimensional dataset in a 2-dimensional space due to dimensionality reduction.

Visualizing the transformed data df_trans:

plt.scatter(df_trans['pca1'], df_trans['pca2'], alpha=0.8)

plt.xlabel('PCA 1')

plt.ylabel('PCA 2')

plt.show()

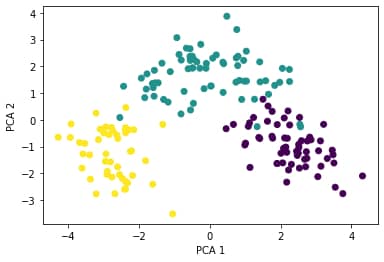

We can add the target values as colour to the above plot (there are three classes: 1, 2, 3) to arrive at the following scatterplot:

After the principal component transformation, the observations are grouped in definable clusters. As an example, if you are building a prediction algorithm for classification like KNN, when applying on the transformed dataset, will produce better accuracy.

[the_ad id=”3031″]Relationship of principal components to the original dataset

First part of the exploration will be the correlation between each principal component and the features from the original dataset.

comp=pd.DataFrame(pca_model.components_, columns=wine.feature_names)

print(comp)

Output:

alcohol malic_acid ash alcalinity_of_ash magnesium \

0 0.144329 -0.245188 -0.002051 -0.239320 0.141992

1 -0.483652 -0.224931 -0.316069 0.010591 -0.299634

total_phenols flavanoids nonflavanoid_phenols proanthocyanins \

0 0.394661 0.422934 -0.298533 0.313429

1 -0.065040 0.003360 -0.028779 -0.039302

color_intensity hue od280/od315_of_diluted_wines proline

0 -0.088617 0.296715 0.376167 0.286752

1 -0.529996 0.279235 0.164496 -0.364903

Each row index with 0 refers to the first principal component. The highest correlation (in absolute terms) between the principal component and the original dataset features is with ‘flavanoids’ feature (0.422934). This means that the wines with a large value for ‘pc1’ in the trans_df will have a high ‘flavinoids’ value as well.

For the second principal component, the highest correlation (in absolute terms) is with ‘color_intensity’ feature (-0.529996) meaning that a wine with a large value for ‘pc2’ in the trans_df will have a low ‘color_intensity’ value.

Following this approach, you get a good gist of understanding how much each principal component’s value is related to the values of a particular dataset feature.

Note: this is not the same as feature importance (yet it will show you similar results as per which features are more important) rather than a correlation overview between the principal components and the original dataset.

[the_ad id=”3031″]Explained variance of principal components

Recall that here we have 2 principal components, where they are linearly uncorrelated. Each of these components explains some variation of the original dataset. The way PCA works is it finds principal components in the descending order by the amount of variation each component explains. Let’s look into that.

print(pca_model.explained_variance_ratio_)

Output:

[0.36198848 0.1920749 ]

From the above, we see that the first principal component explains around 36.2% of the variance of the original dataset, while the second principal component explains around 19.2%.

Recall that in Step 4 when creating the pca instance, we restricted it to 2 components. This was done mainly to showcase the technique and ease the explanations during this tutorial.

In reality, there can be more than 2 principal components, and how many a data scientist would like to use really depends on how much of the variance they would like to have explained. The maximum number of components is restricted by the number of features (in our case it’s 13). To show what we are referring to, we will redo a few steps of his tutorial without restricting the number of principal components to 2.

pca = PCA()

pca_model=pca.fit(df)

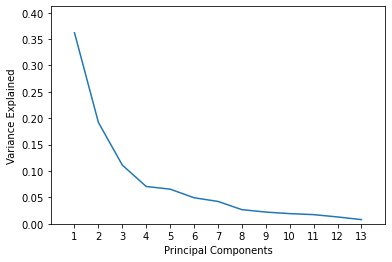

For an N-dimensional dataset, the above code will calculate N principal components. Our wine dataset had 13 features, hence we now have 13 principal components. Let’s take a look at the fraction of the variance each of them explains:

print(pca_model.explained_variance_ratio_)

Output:

[0.36198848 0.1920749 0.11123631 0.0706903 0.06563294 0.04935823

0.04238679 0.02680749 0.02222153 0.01930019 0.01736836 0.01298233

0.00795215]

Similar values for the first and second component as we had before. Now we just added 11 more of them and they all appear in descending order.

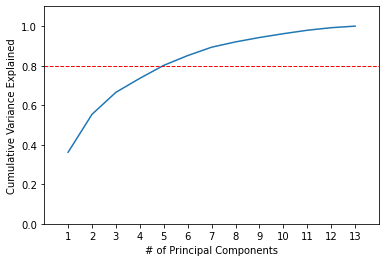

An important factor how we choose how many principal components we need to reduce our dataset to is the cumulative variance explained by them. For each subsequent principal component we will add the variance it explains plus all of the variance explained by preceding principal components.

print(np.cumsum(pca_model.explained_variance_ratio_))

Output:

[0.36198848 0.55406338 0.66529969 0.73598999 0.80162293 0.85098116

0.89336795 0.92017544 0.94239698 0.96169717 0.97906553 0.99204785

1. ]

The first two principal components explain around 55.41%. As we add more principal components, the cumulative variance increases at a decreasing rate.

Assume, as a data scientist, your requirement is that 80% of the variance must be explained in the PCA transformed data. This level of cumulative variance is explained by including 5 principal components. Let’s visualize it.

plt.plot(list(range(1,14)), pca.explained_variance_ratio_)

plt.axis([0, 14, 0, max(pca.explained_variance_ratio_)+0.05])

plt.xticks(list(range(1,14)))

plt.xlabel('Principal Components')

plt.ylabel('Variance Explained')

plt.show()

plt.plot(list(range(1,14)), np.cumsum(pca.explained_variance_ratio_))

plt.axis([0, 14, 0, 1.1])

plt.axhline(y=0.8, color='r', linestyle='--', linewidth=1)

plt.xticks(list(range(1,14)))

plt.xlabel('# of Principal Components')

plt.ylabel('Cumulative Variance Explained')

plt.show()

Conclusion

This article is an introductory walkthrough for theory and application of principal component analysis in Python.

Next steps you can take to explore the benefits of this technique further is to try an apply some machine learning algorithms on original dataset and principal component dataset and compare your accuracy results.

Check out more of my machine learning tutorials.

Feel free to leave comments below if you have any questions or have suggestions for some edits.